# Inverted Pendulum

To finish Neil's [maths class](http://fab.cba.mit.edu/classes/864.20/index.html) and to walk myself through some control basics, I'm going to try to control an inverted pendulum system.

## TL;DR:

**What are you asking?**

To what extent can we replace classic 'ideal' controllers with search algorithms? In this case, for an inverted pendulum-on-a-cart system.

**Whose done what before?**

Most approaches use LQR controllers that find ideal control laws given a model of the system. LQR 'state space' controllers can perform pendulum swing-ups as well as balance upright pendulums, PID controllers can only do the latter.

**What did you do?**

I built a small simulation in JavaScript. I controlled with with a hand-tuned PID controller before developing a state-space controller for the same, and hand-tuned the control law.

I then tried various search algorithms to find an ideal control law without any model or derivative information.

A simplex method that evaluated control laws over a fixed time interval, was prone to local minima, and was only remotely successful when given starting conditions within the neighbourhood of my hand-tuned control law.

A simplex method that evaluated, at each simulation step, possible new control laws for a fixed horizon, performed slightly better, but still tracked into local minima, and developed control laws that minimized errors in the short horizon, but could not find ideal controllers for the full state space.

I then developed a local search method: at each time step it evaluates control laws in the neighbourhood of the currently-implemented control laws, and forecasts their performance at a fixed interval into the future. It picks the best one, and proceeds to the next simulation step, repeating.

**How did you do it?**

JavaScript.

**What questions did you need to answer?**

What does it mean to make a model-predictive controller?

What is 'state space' control?

**What did you learn?**

State space control: neat.

Computing is much faster than *most* of the systems I would be interested in 'low-level' control, so forecasting one- or two- seconds into the future is possible to do thousands of times per second.

Priors are hard to eliminate.

**What are the implications for future work?**

I think this is an interesting approach to control, especially if we do not want to work very hard making perfect models, or designing controllers. It is computationally *much* more expensive than an analytically produced ideal controller, but in simple systems is very tractable.

To bring this into hardware, my first-next-step is to run a similar search routine over model parameters, aligning them with real-world observations. I can then do expensive forecast-based search offline, while using best-forecast control laws online.

## The Simulation

One first step is building an ODE simulation of a pendulum, and rendering that. I can do this pretty easily by pulling code from my earlier javascript pendulum, just moving the platform left/right instead of up/down. I should hope to build an understanding also for simulation time / weight / units relate to world units, so that I can match it against the world.

OK, to start, the pendulum itself can be modelled with:

```math

\ddot{\theta} = \frac{g}{l}\sin \theta

```

Where $`\theta`$ is the angle of the pendulum, $`g`$ is gravity, and $`l`$ is the length of the pendulum.

This makes sense: the angular acceleration is equal to the force on the pendulum, which is a function of gravity, the length of the stick, and its current angle. But what of the cart?

```math

l\ddot{{\theta}} - g\sin\theta = \ddot{x}\cos\theta

```

Where $`x`$ is the position of the cart. This also makes sense! The acceleration of the cart ($`\ddot{x}`$) is related to the horizontal component of the angular acceleration of the pendulum.

In my tiny simulation, I'm going to drive the cart acceleration: I'll do this in hardware as well, so I should be able to expect some $`\ddot{\theta}`$ given an $`\ddot{x}`$

```math

\ddot{{\theta}} = \frac{\ddot{x}\cos\theta + g\sin\theta}{l}

```

I'm pretty sure this is enough to let me write some javascript. Since time is cheap (i.e. computing) and this is simple, I can even default to ahn euler method.

Yeah, this is fine... here's the free swinging, obviously we are used to the real world being *damped*

I'll add keys... ok, just want to get a clip of nearly balancing this human-in-the-loop... it's tough, time is not real yet either:

OK, for time, I'll use JS timers... Got it, running in realtime now. OK.

cool, this works, and euler probably OK because we are already running in realtime. if anything, do better at JS handoffs, events, protocol, etc. control.

## PID Control in Simulation

I've tooled around with PID now, so I can adjust these parameters by hand, next up is search for PID params.

## Search for PID

Autotuning is a world. In fact, with PID being the duct-tape holding many feedback controllers together, having a robust tuning algorithm for PID might be the spiritual equivalent of free duct tape for life: it means you won't make many elegant things, but the things you do make, will work.

[1999: Automatic PID Tuning w/o a Plant Model](lit/1999_automatic-pid-tuning-unfalsified_jun-safanov.pdf)

This paper proposes a method where all we need to tune our PID gains is past data: no knowledge of the plant. It also proposes to do so in realtime, that is, alongside control. My previous idea was to run series of tests in the simulaiton and walk a simplex along an evaluation that occurred in some set of time (say 10s of balancing), with an accumulated error as a metric. The method here would likely be more of a wonderful-tool-belt item for control generally, but is less related to my plans.

There are more, but I need to reestablish my VPN into MIT to access,

[Automatic Tuning of Optimum PID Controllers](https://digital-library.theiet.org/content/journals/10.1049/ip-d.1993.0030)

[Automatic Tuning and Adaption for PID Controllers - a Survey](https://www.sciencedirect.com/science/article/abs/pii/096706619391394C)

## 'UKX' Control

TODO: better explainer, mathjax.

So, pid seeming like a black hole of 'OK' success and all, I did the background learning on state space control and LQR, finding that the *result* of LQR optimization is the generation of an ideal 'K' matrix, which is a control law: meaning that if we have a state matrix 'x' - in this case this is our x position, xdot (speed), theta (angle) and tdot (angular velocity), we have a simple matrix K (aka a 'gain matrix') that we multiply with out state matrix (vector? words) to get some 'u' - our control output. In some sense this is *like* PID control, but it operates on system state, not an error... Interestingly (to me), this means we control around the entire state - here I can write laws that will *also* bring the x-position back to center.

I've formulated this into my simulated controller, and by hand tuned the K gains, resulting in control that looks like this:

This is satisfying, and is *much* smoother than the PID approach. Since I am able to get this close by hand I have good faith that I can write some simplex to search over simulations to find an ideal K matrix, instead of using LQR (which requires more detailed knowledge of the system of eqns that characterize the system). That's next.

## Search for Gain Matrix

Discussion: how did you search? over gaussian set of starting conditions for set time period. What went wrong? finds many local minima. tried momentum (helps) and tried knocking small simplexes into larger volumes. Neither finds their way out of bad starting conditions. Tried modifying error metrics, sample length, starting condition distributions, to no avail.

... one more video

## Continuous Simplex

## The Stepper Driver

I want to build a new stepper driver (code) for this. Currently, my driver & hardware pair has these settings, maxed:

| Setting | Units | Value | Alt Units | Value |

| --- | --- | --- | --- | --- |

| steps / mm | - | 231 |

| acceleration | mm/s^2 | 30 | m/s^2 | 0.03 |

| top speed | mm/s | 20 | m/s | 0.02 |

Although it would seem as though I've made some mistakes in implementation, because this seems pitifully small in terms of 'Gs' - but observed acceleration seems to be near or beyond 1G.

- first, cleanup existing code

- steps/unit as a float,

- underlying state / operation same as current, but understand and document limits

- stepper becomes responsible for acceleration, watch world units, timers

- stepper should become responsible for limits: I want to bake safety into hardware. as a result, it will also be master of its own absolute position.

- to start down the new path, I'll roll it up to accept acceleration commands, in world units `m/s^2`

- eventually I'll want to bundle data packets... RIP msegs

## The Pendulum Hardware

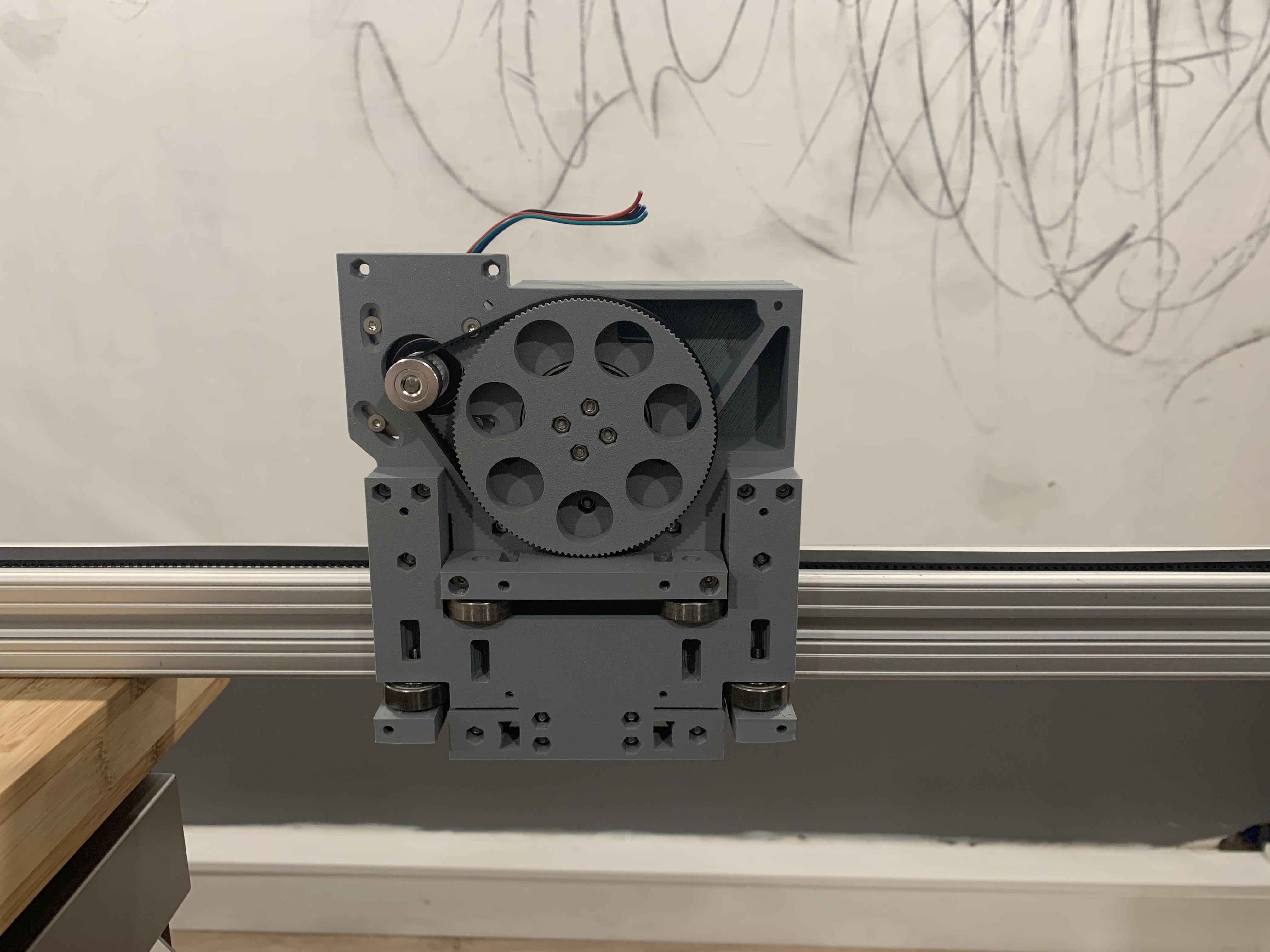

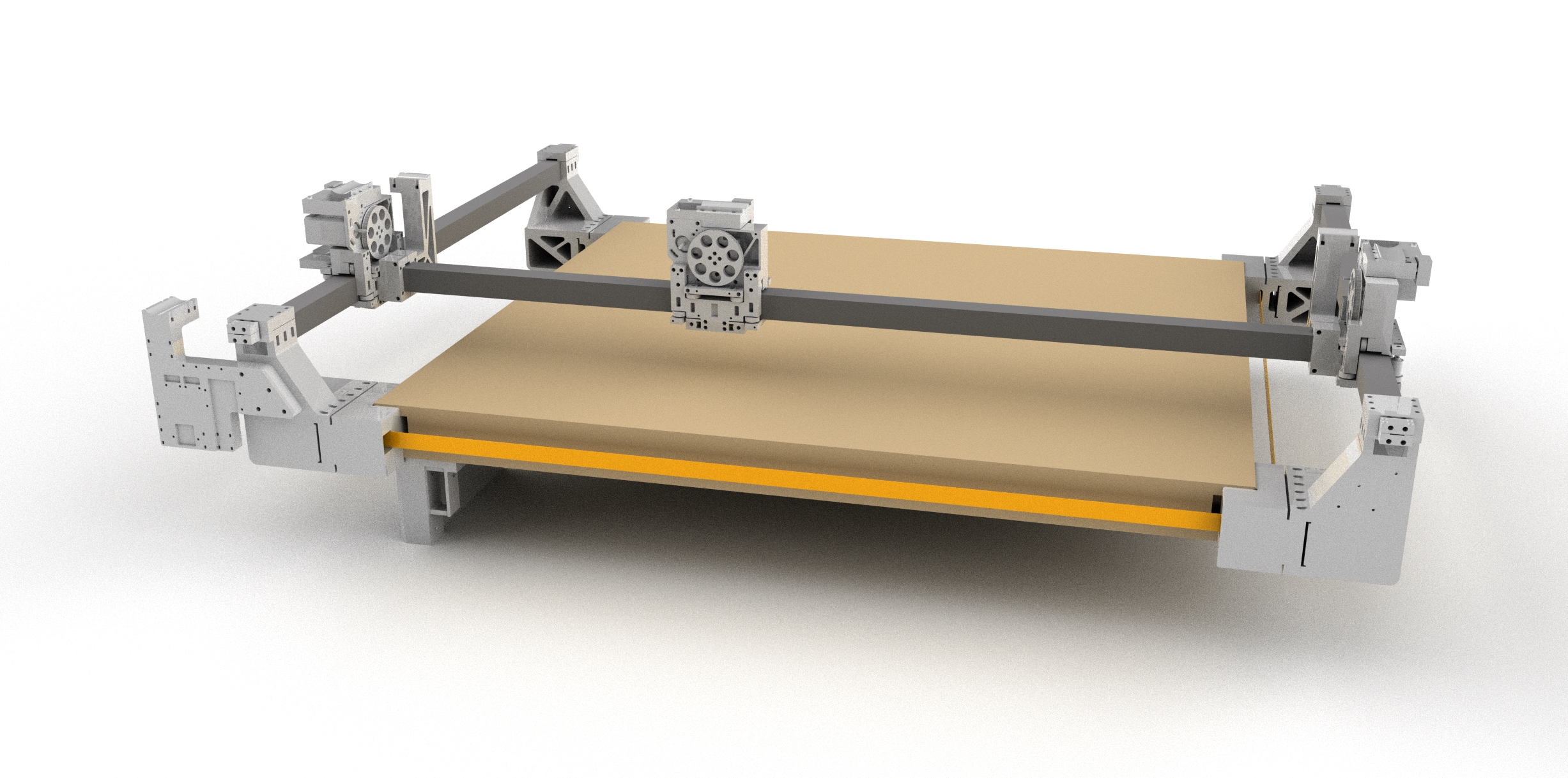

I'm planning on using [this axis type](https://gitlab.cba.mit.edu/jakeread/ratchet) as the 'cart' for the pendulum project. This is part of a larger machine project I am working on in the mean time.

This might prove to have some limits: these are designed to accelerate at a decent clip, but not hit huge top speeds. The 6.5:1 reduction there really kills and speed desires. I think I'll get through the simulation, get a sense of what kinds of accelerations and speeds are required, and revisit this if it's necessary.

There's some CAD and sensor reading here, as well - I'll need to make / print a pendulum bearing / encoder situation, write an encoder driver, and then very likely should filter that encoder data to build a state estimator for its current position.

## Software Architecture

One of the real puzzles here - and I hope this will unlock some secrets for future, more general purpose control of machines - is how to handle the relationship between realtime-worlds (embedded code and *genuine* physics) and asynchronous worlds - like the javascript / networked runtime and simulation.

My suspicion is that *sourcing* time from the lower levels is the move, i.e. track state / time advancements from the bottom, and somehow trickle those up.

## The Actual Control

Here's where I know the least... again, starting point is certainly the simulation, with keyboard inputs to play with. I hope to learn from the simulation:

- what accels, what speeds are necesssary for success (human controller)

- above, w/ relationships to pendulum weight / length,

From there, I'd also like to plot the phase space of the system. I have some idea that one control strategy would be to run a kind of 'path planning' inside of this space... if any point in the space represents a particular state, plot a course to the state we are interested in being at.

I should also do some lit review, that should start here.

[youtube pendulum 1](https://www.youtube.com/watch?v=XWhGjxdug0o)

### Swing Up and Balancing

I imagine I'll start with balancing, as swing up seems to require some next level magic. In [the first video above](https://www.youtube.com/watch?v=XWhGjxdug0o), these are two distinct controllers!

### Learning

The idea is to craft a best-practices-and-all-the-priors model and controller first, and *then* start cutting pieces out to replace them with learning / search.

# Log

## 2020 05 18

Well, this is still a bit of a useless hunk. Search (when given decent starting conditions) with simplex does seem to walk uphill for a minute, but quickly settles into some local minima. There's a few things:

### Much Larger Sample

My first task this morning is to sample from a big ol' data set. With 10 gaussian samples, seems to already work much better. I'll try 100, 1000, and then try assering speed limits and use-of-accel penalties.

On a gaussian sample size of 100, 10s simulations with random start conditions this is honest to god starting to work, although it still has lots of help from the starting conditions. I should put a video in here... and maybe first test truly random startup vals.

This is ... better, but still not great. It does terribly when it isn't given the right quadrant to start operating in.

Well, I think I can conclud that this approach is fraught with local minima issues. I'm going to stash this an move on to a continuous simplex experiment.

As a last ditch experiment, I'll say when things hit a minima, I do a big expand on all fronts. Oh damn - when I spread I need to re-evaluate everything. Dur.

Yeah, still finds these local minima

### Continuous Simplex (much smaller sample)

If / or even if not / these fail, I'm curious about a continuous search. since the issue is about smoothness in the evaluation, this might actually make more sense. so, let's say we have a set of pts that we are tracking, parameters. At beginning of each step, we have some simulation state. At each step in the simulation, we evaluate what-would-be next steps, at each of our simplex points, and otherwise run simplex, finding a new best-point. We use this best point to update the simulation, and proceed, next time running new evaluations of all possible next- steps, etc.

This would be an odd one, but would be neat if it worked.

And it means I need to write simplex in-line with the simulation...

So! I'll mash these together...

Mashed. Does not work. Perhaps the move is to write a different kind of simplex. At each simulation step, with a gain-set centered around the last-used, write a grid surrounding it, at a fixed size. Evaluate all of these, pick the best. Thing won't be subject to shrinking into a local minima for one state, then failing when state changes.

### Continuous, Fixed-Step K-Gains Search

OK, this actually seems like it works.

- can it walk out of bad starting kvals ? (yes)

- what is it sensitive to?

- projection time-span

- error percentages: from pos. or from angle?

- move gen. size

Here's a few trial runs from where I first had this woken up, running with a 1500ms horizon:

When the horizon is smaller, this favours aggressive balancing moves, but ignores drift in position. Here is is running on a 500ms horizon:

Finally, I want to try perturbing this thing... I'll add some keystrokes to knock angular velocity into it.

This is it for now. I'll write everything up and speculate on future work:

- more intelligent walking / step generation, and settling down when locked in.

- alignment to hardware, imagination in software

- relationship between forecast size and system time constant

- appropriateness for control: these are not ideal controllers, we want systems to be deterministic. over time, if conditions become 'normally' stable, small perterbations lead to big problems - try simultaneously building a map of good control states walked in previous traverses through that state, maybe fit some smoothness around these states, jump to previously-known best control laws when state is perturbed into these positions

### Finishing the Assignment

- write more about LQR, try to understand

- plot / quantify simplex success on rosenbrock

## 2020 05 17

Before proceeding, I need a better plan. The original question was about how much of existing control I could replace with search. Now that I'm two days away, it is perhaps apparent to me that I will not likely learn enough about current controller to do this, and then pare out results into search methods. First, I should try to learn what I can about pendulum controllers, they're well studied and perhaps I *can* easily implement best state of the art control.

The curiousity that grew out of this was about using simulation in free-time-land to 'imagine' many possible controllers, while simultaneously using some small learning method to adapt that simulation's phyiscal parameters to a real-world object. Then, use the free-time to evaluate many more controllers than would be reasonable to test in the world, and loop those results back. This doesn't answer the original question about how much control we can replace with search, but invents an architecture to quickly tune real systems using computer 'imagination' matched to reality.

[Cart: 1: Setup](https://www.youtube.com/watch?v=qjhAAQexzLg)

[Cart: 2: Pole Placement](https://www.youtube.com/watch?v=M_jchYsTZvM)

[Cart: 3: LQR](https://www.youtube.com/watch?v=1_UobILf3cc)

For control.

- I think I am missing some masses in my simulation: the pendulum

- State Space is o, odot, x, xdot

- want a control that, given full state, computes a new xddot

- I'm just not sure about this transform, it looks like maybe there are just four values to search for, that multiply that state space variable to produce an output. it would be rad to just search for those.

- u = -Kx, where u is the control output, K is the control matrix, x is the state. just search for K. too easy? 'gain matrix K'

- re-watch these videos:

```

is

K

0 1 2 3

and x

0

1

2

3

such that Kx = shape(1,1) ?

```

This would be great because it just means we could, for a set of state, search for gains per each, to make a control law, regardless of whether we know really the full derivation of the system.

Yeah, I'm pretty confident this is the shape of it. To test that out... I'll git-push the current PID controller, or maybe fork the hunk. Whoah. Nice. Then I'll reformat to have a state vector, a gains vector, and see what tooling that around is like / if it does anything remotely desireable.

OK, this is wicked sick, broh. I get to control for full state: what x, xdot, o, and odot that I want... this one centers itself also. Bless bless. So this is what I'll search over... and I've even been able to write a control law by hand, that's [1,2,-100,-100] so this is obviously tractable.

Now the curiousity is how to do function evaluation, to search for ideal control laws. It would be great to be able to do this all the time, improving the control law at each step. To get a sense of error metrics, I should instrument this version with an output of some error metric... at each step, and cumulative. This is pretty oscillatory:

Meaning that I really should be evaluating over some fixed time, a sum of performance. My last question would be about starting conditions: if in each 'run' I start with random conditions, performance will vary given the random seed as well. I will be drawing function evaluations for the simplex from different landscapes at each turn. To combat this, I should either used fixed conditions, which doesn't sound ideal, as it will optimize for that condition only. I can run the same simulation from a set of diverse starting conditions, but repeated - which would be uniform, but time consuming and still not completely optimized around the whole set - or I could run from a sample of 10 or so random conditions drawn from a gaussian set and assume some semblance of smoothness around those.

Whatever it is, to start I need mechanics for pushing k-vals out of a search algo, into the simulation, and returning errors from a run of those vals.

Wow, headless (no drawing) this is wicked fast, I can run 10k timesteps somewhere between 0 and 1ms. This will make search vastly easier. So to start I'll run the same starting condition for 10 seconds (10k steps), and return a cumulative error. If I make-modular the function eval, I can use the same thing to evaluate and to render with...

Searching is all running, but can't find the right gain values, given a random start. To sanity check, I can give this thing the rosenbrock function, and see if simplex finds 1,1,1,1 there.

It does, beautifully. I could try reducing the search space: just two k-vals, for x-pos and y-pos.

Even when I set starting conditions to be of the right sign, this struggles to find good controllers... *and* they fail on sides-to-start that aren't the condition they were trained on: that makes sense.

To sanity check my errors, I'll plot those out from the simulation, so that I understand that that's working. Those also check out.

To make this tractable for more starting conditions, I'll adjust my receipt-of-new parameters to return results from four starting conditions. While I cook I might just set it off to run forever.

Some momentum seems to do wonders, but these are hardly optimal and I'm still hand-holding with the initial parameters. This isn't useful yet. Might try enforcing some minimum spread...

## 2020 05 16

OK, here we go. PID is step one. Did that.

I'm trying to plot error, and error derivative. Trouble with flow control into the chart... strict flowcontrol is tricky, espcially when things are gated on one another. Might want to have a mechanism to clear previously-put tokens... hmmm

I really expect / hope to show that this expands to hardware, I'd be more pleased by a hardware-in-the-loop system, where I use 'realtime' alignment to a known simulation, and a simultaneous simplex search for PID parameters, and in turn update those to the realtime thing... this is probably cool as well because simplex should adapt (?) to a changing evaluation function (i.e. it's searching the simulation: the simulation is being adapted to suit the real world)... could be neeeeat

## 2020 05 06

I expect the *result* of the control-with-search experiment will be that success does not look like replacing the control algorithm, but with searching for parameters in the simulation used to to prediction. At least - that aspect in realtime. Since time for simple systems becomes essentially free when we simulate them, we can use these simulations to search for controllers over thousands of 'epochs' - then we just have to plug them into our physical systems ... their 'digital twins' - so, the pain there is in aligning system parameters. However, prediction here works on a much faster time step: we have the last-state, and a prediction of the next state, given our simulation. We also have a physical equivalent of this. Say we just input control variables open-loop into the physical system, read its state, and (we are potentially doing this near or upwards of 1kHz), work to match *our* predicted next-ms-state to the system's real next-ms-state. So we can do fast simulation / physical alignment, and then lean on the free time in the simulation to learn higher order control. Perhaps, or likely, that a *good* implementation of these mixes both, so that it's always doing both - we can imagine the computer sort of every-so-often receiving 'real' data, using that to check / match its model against the world, and then (in the remaining cycles inside of the ms: 4 000 000 on a 4Ghz clock), continue to imagine possible futures / trajectories / control parameters. OK.

## 2020 04 05

I've turned all of my hardware and frameworks back on after ~ 1 mo away from code, so I'm just note taking on what my steps are for this thing, and some other observations.

I figure I should first build this virtually, in simulation, and then attach that to hardware. Since it's appropriate, I'll also try to take this opportunity to ~~break~~ explore some of the architecture decisions I made while I was building [squidworks](https://gitlab.cba.mit.edu/squidworks/squidworks), which I am ready and excited to revisit.

I should keep things simple and move fast, so as much as possible I'll retain most of squidworks' function. To start, this should just mean (1) writing ahn hunk that runs the simulation, and does control, and (2) writing a new stepper driver.

- current acceleration control is not cool enough for this project. also, limits for the same should be found downstream at the motors. motors have no guarantee that they have a faithful master, should protect themselves. how to find and auto config all of this?

- current stepping code is also sort of limited. I figure I should roll new, simple codes that begin with running accleration, and maybe even track position state at the endpoint as well - lets build from the bottom up here, since remote states are always a PITA. I like the thought that in the future, states are queried-only, so we can put heavy stuff like position in these areas

I am into this last point. I want a stepper driver that knows (1) where it is, (2) what its limits are, and then (3) can accept accelerations as inputs. It should be dampening; when there is no requested accel, or it's not near an edge (that it cannot deccel into), it deccelerates. This will put enough intelligence into the motor that I can build other APIs on top of it, which hopefully will end up looking like some kind of bussed - and - timestamped - moves to make... ?

I have some code cleanup to run first, I think. First would be rebasing scuttlefish into cuttlefish. Then, since I'm going to break the stepper otherwise, I should branch ponyo.

OK, done with that... and notes here... I suppose the simulation is my first order, as it's a javascript world for me for a few minutes, some chance that I might wrap on new protocol stuff before I get too far into this, it would be cool to use this to push all of that.